"PyTorch Official Documentation (1515 pages)": free PDF e-book download

Download option 1:

Baidu Netdisk download link: https://pan.baidu.com/s/1qf97x_PJqLW-H6_72TkTAQ

Baidu Netdisk password: 1111

Download option 2:

http://ziliaoshare.cn/Download/af_124191_pd_PyTorchGFWD_G1515Y.zip

Author: empty | Pages: 1515 | Publisher: empty

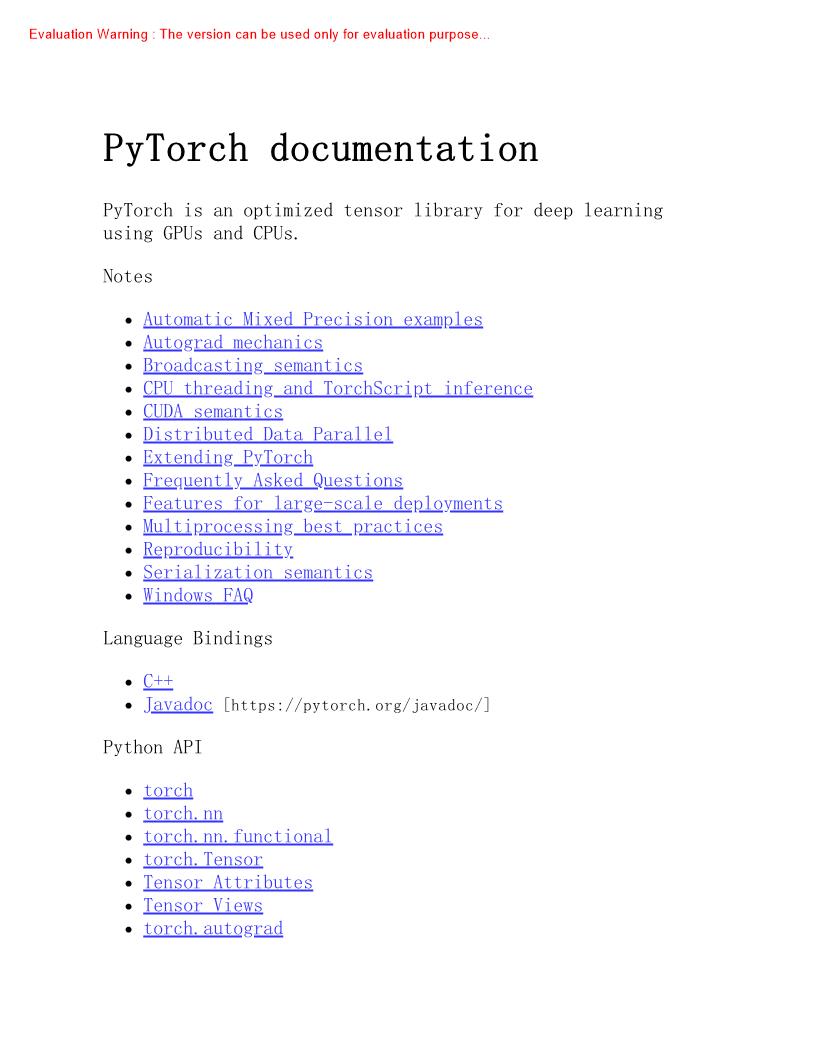

Introduction to "PyTorch Official Documentation (1515 pages)"

torch.cuda.amp.GradScaler is not a complete implementation of automatic mixed precision. GradScaler is only useful if you manually run regions of your model in float16. If you aren't sure how to choose op precision manually, the master branch and nightly pip/conda builds include a context manager that chooses op precision automatically wherever it's enabled. See the master documentation [https://pytorch.org/docs/master/amp.html] for details.

- Gradient Scaling
  - Typical Use
  - Working with Unscaled Gradients
    - Gradient clipping
  - Working with Scaled Gradients
    - Gradient penalty
  - Working with Multiple Losses and Optimizers

Gradient Scaling

Gradient scaling helps prevent gradient underflow when training with mixed precision. Gradients with very small magnitudes can round to zero in float16. Multiplying the loss by a scale factor multiplies every gradient by the same factor (by the chain rule), which keeps them inside float16's representable range. The gradients are divided by that factor again before the optimizer uses them. Instances of torch.cuda.amp.GradScaler help perform the steps of gradient scaling conveniently.
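Why scaling prevents underflow can be demonstrated without PyTorch. The following is a minimal pure-Python sketch, not the GradScaler implementation. It emulates float16 rounding with the struct module's "e" format and multiplies a gradient by a scale factor before rounding, as backward() on a scaled loss would. It then unscales the result. The scale 2**16 matches GradScaler's default init_scale, and halving the scale when an overflow is detected mirrors its default backoff_factor of 0.5. The helper names to_fp16 and toy_scaler_step are hypothetical. The toy also simplifies by rounding the gradient itself to float16.

```python
import math
import struct


def to_fp16(x):
    """Round x to the nearest IEEE 754 half-precision value, returning inf on overflow."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except (OverflowError, struct.error):
        # struct refuses finite values beyond float16's range; fp16 arithmetic would give inf.
        return math.copysign(math.inf, x)


def toy_scaler_step(true_grads, scale, backoff_factor=0.5):
    """One step of a hypothetical toy loss scaler.

    Returns (unscaled_grads, next_scale); unscaled_grads is None when the step is skipped.
    """
    # backward() on (loss * scale) multiplies every gradient by scale (chain rule);
    # this toy then stores each scaled gradient in float16.
    stored = [to_fp16(g * scale) for g in true_grads]
    if not all(math.isfinite(g) for g in stored):
        # Overflow: skip the optimizer step and shrink the scale for the next iteration.
        return None, scale * backoff_factor
    # Unscale in Python-float (double) precision before an optimizer would use them.
    return [g / scale for g in stored], scale


tiny = 1e-8  # below float16's smallest subnormal, 2**-24 (about 6e-8)
print(to_fp16(tiny))  # 0.0: stored unscaled, the gradient underflows and is lost

grads, scale = toy_scaler_step([tiny], scale=2.0**16)
print(grads[0], scale)  # grads[0] is close to 1e-8; the scale stays 65536.0

grads, scale = toy_scaler_step([1.0], scale=2.0**16)
print(grads, scale)  # None 32768.0: 65536 exceeds float16's max (65504), so the step is skipped
```

The real GradScaler also grows the scale by growth_factor (default 2.0) after growth_interval (default 2000) consecutive steps without infs or NaNs. This toy omits that part.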

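Typical Use

In a typical training loop, for epoch in epochs and for each batch of data, scaler.scale(loss).backward() creates scaled gradients. Next, scaler.step(optimizer) unscales the gradients of the optimizer's assigned parameters and calls optimizer.step(), skipping that call if the gradients contain infs or NaNs. Finally, scaler.update() adjusts the scale for the next iteration.

Working with Unscaled Gradients

All gradients produced by scaler.scale(loss).backward() are scaled. If you wish to modify or inspect the parameters' .grad attributes between backward() and scaler.step(optimizer), you should unscale them first. For example, gradient clipping manipulates a set of gradients so that their global norm (see torch.nn.utils.clip_grad_norm_()) or maximum magnitude (see torch.nn.utils.clip_grad_value_()) is at most some user-imposed threshold. If you attempted to clip without unscaling, the gradients' norm or maximum magnitude would also be scaled. Your requested threshold, which was meant to be the threshold for unscaled gradients, would therefore be invalid.

scaler.unscale_(optimizer) unscales gradients held by optimizer's assigned parameters. If your model or models contain other parameters that were assigned to another optimizer (say optimizer2), you may call scaler.unscale_(optimizer2) separately to unscale those parameters' gradients as well.

Gradient clipping

Calling scaler.unscale_(optimizer) before clipping enables you to clip unscaled gradients as usual. The scaler records that scaler.unscale_(optimizer) was already called for this optimizer in this iteration. As a result, scaler.step(optimizer) knows not to redundantly unscale gradients before (internally) calling optimizer.step().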
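The unscale-then-clip ordering can be checked with a toy model. The following pure-Python sketch does not use PyTorch. ToySGD, ToyScaler, grad_norm and clip_grad_norm are hypothetical stand-ins, and the clipping helper is a simplified version of what torch.nn.utils.clip_grad_norm_ does, without its epsilon or norm-type options. ToyScaler mimics the bookkeeping described above. It remembers which optimizers were already unscaled this iteration, so step() does not unscale twice. Like the real GradScaler, it raises a RuntimeError if unscale_() is called twice for the same optimizer before update(). The script compares clipping the scaled gradients against unscaling them first.

```python
import math


class ToySGD:
    """Hypothetical minimal optimizer: plain SGD over a list of float parameters."""

    def __init__(self, params, lr):
        self.params = params
        self.grads = [0.0] * len(params)
        self.lr = lr

    def step(self):
        for i, g in enumerate(self.grads):
            self.params[i] -= self.lr * g


class ToyScaler:
    """Hypothetical stand-in for GradScaler's unscale_/step bookkeeping (not the real class)."""

    def __init__(self, scale):
        self.scale = scale
        self._unscaled = set()  # optimizers already unscaled in this iteration

    def unscale_(self, opt):
        if opt in self._unscaled:
            raise RuntimeError("unscale_() already called on this optimizer since the last update()")
        opt.grads[:] = [g / self.scale for g in opt.grads]
        self._unscaled.add(opt)

    def step(self, opt):
        # Unscale only if the user has not already done so this iteration.
        if opt not in self._unscaled:
            self.unscale_(opt)
        opt.step()

    def update(self):
        # A new iteration begins: every optimizer must be unscaled again.
        self._unscaled.clear()


def grad_norm(grads):
    return math.sqrt(sum(g * g for g in grads))


def clip_grad_norm(grads, max_norm):
    """Simplified clipping: rescale grads in place so their L2 norm is at most max_norm."""
    total = grad_norm(grads)
    if total > max_norm:
        for i in range(len(grads)):
            grads[i] *= max_norm / total
    return total


SCALE = 1024.0
TRUE_GRADS = [3.0, 4.0]  # the unscaled global norm is 5.0

# Wrong: clip the *scaled* gradients, then let step() unscale them.
wrong_opt = ToySGD([0.0, 0.0], lr=0.1)
wrong_scaler = ToyScaler(SCALE)
wrong_opt.grads = [g * SCALE for g in TRUE_GRADS]  # what backward() on a scaled loss yields
clip_grad_norm(wrong_opt.grads, max_norm=1.0)
wrong_scaler.step(wrong_opt)
wrong_scaler.update()

# Right: unscale_ first, clip, then step() skips the redundant unscale.
right_opt = ToySGD([0.0, 0.0], lr=0.1)
right_scaler = ToyScaler(SCALE)
right_opt.grads = [g * SCALE for g in TRUE_GRADS]
right_scaler.unscale_(right_opt)
clip_grad_norm(right_opt.grads, max_norm=1.0)
right_scaler.step(right_opt)
right_scaler.update()

print(grad_norm(wrong_opt.grads))  # roughly 1/1024: the threshold hit the scaled values
print(grad_norm(right_opt.grads))  # roughly 1.0: the threshold the user asked for
```

With a scale of 1024, clipping the scaled gradients to norm 1.0 leaves an applied update whose norm is about 1/1024, far below the intended threshold. Unscaling first yields the requested norm.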